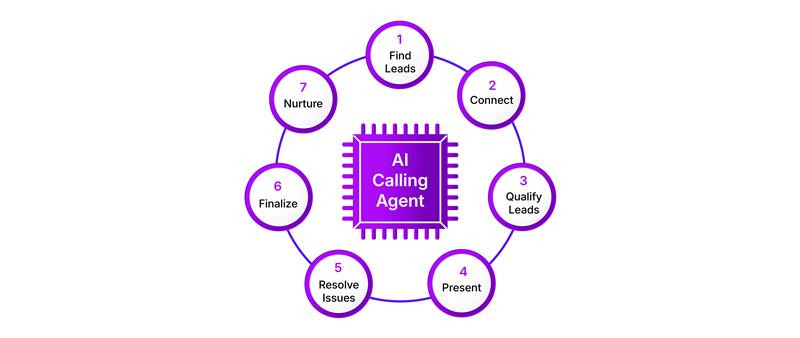

Automating cold calling with AI to address B2B sales objections

Cold-calling objections are inevitable, and increasingly expensive. They happen when prospects end the conversation before you can demonstrate value. The catch: these objections often aren't genuine rejections. They're reflexive responses meant to end an uncomfortable interruption. Most sales reps don't know how to handle this. They treat "We're not interested" as a hard no […]

Automating cold calling with AI to address B2B sales objections Read More »