Agentic AI is having its moment. It’s making decisions, taking actions, and collaborating with your tools.

Suddenly, your team has tools that can browse the web, write a blog post, fill out a spreadsheet, book a meeting, make calls, and update CRM.

Impressive? Absolutely.

But as with any emerging tech, there’s a gap between what it is and what people think it is. We’ve seen teams get excited about agentic AI and then hit a wall. Not because the tech isn’t real. But because the expectations were off.

Let’s break down the common misconceptions about agentic AI.

What people think agentic AI is versus what it actually is.

1. Giving agents unchecked autonomy may lead to systemic instability

Not if they’re built right.

Modern agents don’t just “think” and go. They operate within controlled environments:

- Scoped API access

- Pre-defined task goals

- Sandboxed execution

- Human-in-the-loop approvals

They’re powered by large language models (LLMs), yes! But they’re orchestrated through agent frameworks like LangChain, CrewAI, or OpenAI’s function calling. These frameworks let you tightly define what tools the agent can use, what steps it can take, and what counts as success.

So, if an agent schedules a wrong call or loops endlessly, it’s not because it’s out of control. It is because the task wasn’t scoped clearly.

AI agents are governed and constrained through three mechanisms: the prompt structure, the memory model, and the toolchain access.

Therefore, start small. Monitor closely. And build guardrails early into your design to ensure safety and maintain control as complexity grows.

2. Agentic AI is just a smarter chatbot

On the surface, it feels like a fair comparison because LLMs are behind both. But the architecture is fundamentally different.

Chatbots are reactive. They wait for input, then generate output.

However, AI agents are inherently proactive. For example, if their goal is to manage inbound support calls to resolve technical issues, they autonomously deconstruct it into subtasks. Identify the caller’s intent, retrieve relevant solutions, guide the customer step by step, and escalate when needed.

That means agents need:

- Planning capabilities (task decomposition)

- Tool use (APIs, browsers, CRMs)

- Short-term memory or state management

- Autonomy to decide next steps

Think of it this way: if ChatGPT is your intern explaining how to do something, an agent is the intern who actually does it. All with access to your tools and data, but within limits.

It’s the difference between suggestion and execution.

3. The risks are high; better to wait for the tech to mature

Understandable.

But here’s the catch: the longer you wait, the further ahead your competitors get.

And you don’t need to start with high-risk, high-stakes workflows.

Smart teams start with low-complexity, high-volume tasks, including:

- Drafting internal updates

- Handling inbound/outbound calls

- Categorizing leads

- Summarizing customer calls

You can set up agents with approval steps, limited permissions, and real-time logging. And tools like Rewind AI, AutoGen Studio, and OpenAgents offer visual debugging, so it is clear what decisions were made and why.

Waiting doesn’t reduce risk. It just increases your learning curve later.

4. AI agents’ actions aren't always transparent or predictable

Only when you don’t design for traceability.

By default, LLMs are probabilistic. It means they generate responses based on token prediction, not logic trees. This is where the fear of “unpredictability” comes from.

But LLMs are structured by agent frameworks using deterministic workflows, which include:

- Decision nodes

- Tool selectors

- Response validators

- Retry handlers

With these in place, you can debug an agent like you’d debug a workflow. Want to know why the agent skipped an inbound call or presented the data in a certain way? Just check the log.

It is simple, transparent, auditable automation.

5. It’s another hype cycle (remember NFTs?)

We hear this one a lot. The difference? NFTs (Non-Fungible Tokens) didn’t automate 40% of your workflow.

Agentic AI is part of a broader vision. It has initiated a shift from human-triggered automation to goal-driven systems that act with minimal input.

And this isn’t theoretical. Companies are already using agents to:

- Monitor competitors and send alerts

- Draft personalized prospecting emails

- Handle inbound and outbound calls

- Manage first-line customer support

- Clean and enrich CRM records

The tools are early, yes! But the value is real, and it is the infrastructure layer for the next generation of work.

6. AI agents are exclusively accessible to large enterprises

That used to be true. Not anymore.

Open-source frameworks like LangGraph, CrewAI, or AutoGen mean you don’t need a huge engineering team to get started.

And platforms like Dust, Zapier AI, and Hex make it drag-and-drop simple to deploy agents across internal workflows.

Costs have shifted from “we need a research lab” to “can we spare a week to pilot this?”

Small teams are using agents to save hours per week on things like:

- RFP response prep

- Sales call analysis

- SEO research

- Internal knowledge retrieval

You don’t need scale to start. You just need a process worth automating.

7. AI agents are either fully autonomous or nothing

One of the most dangerous myths.

Agentic AI isn’t a binary between full autonomy and manual control. It operates along a spectrum, enabling dynamic collaboration between humans and machines. Humans can delegate goals while retaining oversight, intervention, and strategic direction.

Most high-performing agents today run in co-pilot mode:

- The agent drafts → a human reviews

- The agent suggests → a human approves

- The agent retries → a human supervises

AI agents can operate at varying levels of independence. This ranges from executing predefined tasks to making real-time decisions with minimal oversight.

Design choices like control flow, human-in-the-loop mechanisms, and confidence thresholds define where an agent sits on that spectrum.

You can always start with assistive agents, then turn up the autonomy as confidence grows.

8. AI agents are neutral and immune to manipulation

They’re not. They can be tricked, misled, or steered in unintended directions.

For example:

- Prompt injections can sneak in malicious instructions

- Reward hacking can cause agents to optimize for metrics that don’t align with business outcomes

- Bias in training data can influence how agents rank or prioritize results

The solution?

- Use output filtering and response validators

- Audit logs for anomalous behavior

- Introduce human-in-the-loop checkpoints for sensitive tasks

Good design prevents most issues. But assuming agents are foolproof is the quickest way to set system up for failure.

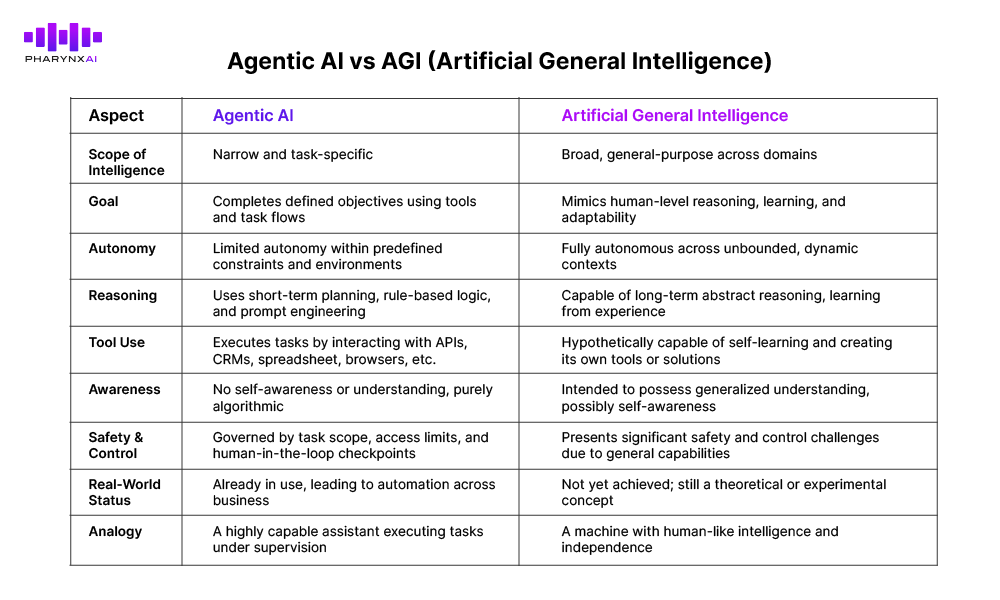

9. Agentic AI = AGI (Artificial General Intelligence)

No. Agentic AI is not going to take over the world.

And let’s clarify: Agentic AI is not Artificial General Intelligence.

It doesn’t “understand” like a human. It doesn’t reason deeply or generate big ideas from scratch. It follows task flows, reasons through short-term memory, and completes defined objectives.

It is:

- Goal-oriented

- Tool-using

- Narrowly capable

This is software designed to perform tasks, not to replicate human consciousness. Let’s not mistake functional intelligence, such as pattern recognition or task automation, for true understanding or sentience.

In true sense, this “intelligence” is bounded by algorithms and data, lacking awareness or emotions.

10. Replacing old automation systems with intelligent agents for efficiency

Tempting… but not smart.

Agents are great for tasks that require flexibility, judgment, or language processing.

But if your workflow is rule-based, predictable, and fast, traditional automation still wins on speed and cost.

For example:

An AI agent is highly effective when dealing with complex, unstructured tasks, like reading through a messy PDF, extracting key information, and drafting a clear, professional email based on that content. This requires understanding, summarization, and generation, which agents handle well.

On the other hand, using an AI agent for simple, repetitive tasks, such as copying a form entry into Airtable, is unnecessary and inefficient. Such tasks are straightforward and better suited for basic automation or scripts rather than a sophisticated agent.

Use agents where logic needs to bend. Keep automations where logic is fixed.

Closing the loop

Agentic AI isn’t about replacing humans.

With agentic AI, we build systems that can take high-level goals and handle the messy middle, such as working across tools, making decisions in context, and adapting as they go. They move faster, require less step-by-step input, and improve over time.

But like any powerful tool, their value lies in how they’re implemented.

Success depends on how teams experiment, learn, and adapt. The most effective teams are testing early, scoping wisely, and evolving faster than their competitors.

Agentic AI is already changing how work gets done.

Are you ready to co-create the future of work with agentic AI?